[Dave Niewinski] clearly knows a thing or two about robots, judging from his YouTube channel. Usually his projects involve robot arms mounted on some sort of wheeled platform, but this time it's the turn of some pretty famous yellow robot legs, in the shape of Spot from Boston Dynamics. The premise is simple: tell the robot what snacks you want, entirely by voice command, and off he goes to fetch them. But we're not talking about navigating to the fridge in the same room. We're talking about trotting out the front door, down the street, and across roads to visit a favorite restaurant. Spot will then order the snacks and bring them back. Fully autonomously.

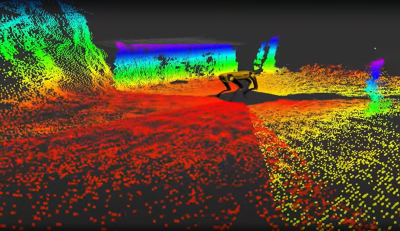

There are multiple things going on here, all of which are pretty big computational tasks. Firstly, there is no cloud-based voice control à la Google's voice assistant or Alexa. The robot works on the premise of full autonomy, which means no internet connectivity for any aspect. All voice recognition, speech-to-text, and speech synthesis are performed locally using NVIDIA Riva, a GPU-accelerated speech AI SDK, running on an NVIDIA Jetson AGX Orin carried on Spot's back. A front-facing webcam supplies the audio feed for this. The voice recognition application listens for the wake phrase, then turns the snack order into text for later replay when Spot gets to the destination.

Navigation is taken care of by a MicroStrain RTK GNSS module, which has all the needed robustness, such as dual antennas and an inertial fallback for regions with a spotty signal. But GNSS navigation alone is no use out in the real world, which is where Spot's depth cameras come in. These enable local obstacle avoidance, as per the usual Spot behavior we've all seen before.

But what about crossing the road without getting tens of thousands of dollars of someone else's hardware crushed by a passing truck? Spot's onboard camera streams are fed into NVIDIA's DashCamNet model, which enables real-time recognition of moving obstacles such as cars, humans, and anything else that might wander into the way.

All in all, it's a cool project showing the future potential of AI in robotics for important tasks, like fetching me a beer when I most need it, even if it comes from the local corner shop.
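To make the "listen for the wake phrase, then capture the order for later replay" step a little more concrete, here is a minimal sketch of that gating logic. It assumes the speech recognizer (Riva, in this build) has already produced finished text transcripts; it does not call any Riva API. The class name `OrderCapture`, the wake phrase "hey spot", and the replay wording are all hypothetical illustrations, not taken from the project.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class OrderCapture:
    """Hypothetical wake-phrase gate: ignore speech until the wake phrase is
    heard, then keep the next utterance as the snack order for later replay."""
    wake_phrase: str = "hey spot"  # assumed wake phrase, not from the project
    armed: bool = False
    orders: List[str] = field(default_factory=list)

    def feed(self, transcript: str) -> Optional[str]:
        """Process one finished ASR transcript; return the order once captured."""
        text = transcript.strip().lower()
        if not self.armed:
            if self.wake_phrase in text:
                # Handle "hey spot, get me chips" spoken as a single utterance.
                remainder = text.split(self.wake_phrase, 1)[1].strip(" ,.")
                if remainder:
                    self.orders.append(remainder)
                    return remainder
                # Wake phrase alone: treat the next utterance as the order.
                self.armed = True
            return None
        # Armed: consume the next utterance as the order and disarm.
        self.armed = False
        order = text.strip(" ,.")
        if order:
            self.orders.append(order)
            return order
        return None

    def replay(self) -> str:
        """Build the text a speech synthesizer would read out at the destination."""
        return "Hi, I would like to order: " + "; ".join(self.orders)


cap = OrderCapture()
for utterance in ["what a nice day", "Hey Spot", "Two bags of chips and a soda."]:
    cap.feed(utterance)
print(cap.replay())  # Hi, I would like to order: two bags of chips and a soda
```

Keeping the order as plain text, rather than parsing it into items, matches the replay-at-the-counter approach described above: the robot only needs to repeat what it heard.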

We love robots around here. Robots can mow your lawn, navigate inside your house with a little help from invisible QR codes, and even help out with growing your food. The long-promised robot-assisted future may now be looking more like the present.

Need A Snack From Across Town? Send Spot!

Source: Manila Flash Report