If you want to try high-quality voice recognition without buying something, good luck. Sure, you can borrow the speech recognition on your phone or coerce some virtual assistants on a Raspberry Pi to handle the processing for you, but those aren’t good for major work where you don’t want to be tied to some closed-source solution. OpenAI has introduced Whisper, which they claim is an open source neural net that “approaches human level robustness and accuracy on English speech recognition.” It appears to work on at least some other languages, too.

If you try the demonstrations, you’ll see that talking fast or with a lovely accent doesn’t seem to affect the results. The post mentions it was trained on 680,000 hours of supervised data. If you were to talk that much to an AI, it would take you 77 years without sleep!

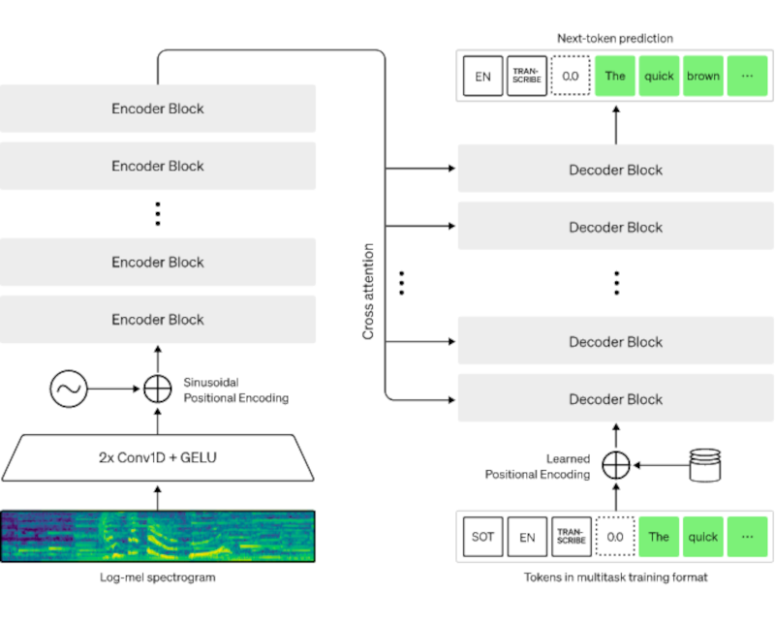

Internally, speech is split into 30-second chunks, each of which is converted into a spectrogram. An encoder processes the spectrogram and a decoder digests the results using some prediction and other heuristics. About a third of the audio data was non-English, and the model was trained to either transcribe it in the original language or translate it to English. You can read the paper about how the generalized training does underperform some specifically-trained models on standard benchmarks, but the authors believe that Whisper does better at random speech beyond particular benchmarks.
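To make the first step concrete, here is a minimal sketch of cutting audio into 30-second pieces. It is not Whisper's actual code: the function name `split_into_chunks` is made up for illustration, the 16 kHz sample rate is an assumption, and the spectrogram and neural net stages are left out entirely.

```python
SAMPLE_RATE = 16_000   # assumed sample rate in samples per second
CHUNK_SECONDS = 30     # chunk length mentioned in the article
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS


def split_into_chunks(samples, chunk_samples=CHUNK_SAMPLES):
    """Split a sequence of audio samples into fixed-length chunks.

    The last chunk is padded with zeros (silence) so every chunk
    has exactly chunk_samples entries.
    """
    chunks = []
    for start in range(0, len(samples), chunk_samples):
        chunk = list(samples[start:start + chunk_samples])
        # Pad a short final chunk with silence.
        chunk.extend([0.0] * (chunk_samples - len(chunk)))
        chunks.append(chunk)
    return chunks
```

With these assumptions, a 70-second clip comes back as three chunks, the last one holding 10 seconds of audio followed by 20 seconds of silence.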

Even the “tiny” variant of the model has 39 million parameters, and the “large” variant has over a billion and a half. So this probably isn’t going to run on your Arduino any time soon. If you do want to code, though, it is all on GitHub.
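If you want a feel for what using it looks like, here is a short sketch. It assumes you have installed the `whisper` Python package from the project's GitHub repository along with ffmpeg. The wrapper function `transcribe` and the file name `recording.wav` are hypothetical, and the first call downloads the model weights.

```python
# Model sizes offered by the project, smallest to largest.
MODEL_NAMES = ("tiny", "base", "small", "medium", "large")


def transcribe(audio_path, model_name="tiny"):
    """Return the transcribed text for an audio file (hypothetical wrapper)."""
    if model_name not in MODEL_NAMES:
        raise ValueError(f"unknown model {model_name!r}; pick one of {MODEL_NAMES}")

    # Imported here so the check above works even without the package installed.
    import whisper

    model = whisper.load_model(model_name)   # downloads weights on first use
    result = model.transcribe(audio_path)    # dict that includes a "text" entry
    return result["text"]
```

Calling `transcribe("recording.wav")` should hand back the text as a string. Starting with “tiny” keeps the download and memory use small; the larger models trade speed for accuracy.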

There are other solutions, but not this robust. If you want to go the assistant-based route, here’s some inspiration.

OpenAI Hears You Whisper

Source: Manila Flash Report
