You might not have heard about Stable Diffusion. At the time of writing, it is only a few weeks old. Or perhaps you’ve heard about it and some of the hubbub around it. It is an AI model that can generate images based on a text prompt or an input image. Why is it important, how do you use it, and why should you care?

This year we have seen several image generation AIs such as DALL-E 2, Imagen, and even Craiyon. NVIDIA’s Canvas AI lets you paint a crude image in which different colors represent different elements, such as mountains or water, and then transforms it into a beautiful landscape. What makes Stable Diffusion special? For starters, it is open source under the CreativeML Open RAIL-M license, which is relatively permissive. Additionally, you can run Stable Diffusion (SD) on your own computer rather than in the cloud through a website or API. The developers recommend a 3xxx-series NVIDIA GPU with at least 6 GB of VRAM to get decent results. But because it is open source, patches and tweaks enable it to run on a CPU alone, on AMD hardware, or even on a Mac.

This touches on the more important thing about SD: the community and energy around it. There are dozens of repos with different features, web UIs, and optimizations. People are training new models or fine-tuning existing ones to generate particular styles of content better. There are plugins for Photoshop and Krita. Other models, such as image upscaling or face correction, are being incorporated into the flow. The speed at which this has come into existence is dizzying. Right now, it’s a bit of the wild west.

How do you use it?

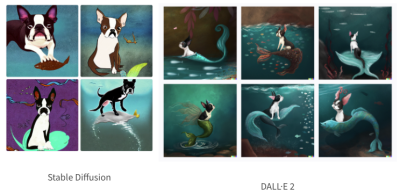

After playing with SD on our home desktop and fiddling around with a few of the repos, we can confidently say that SD isn’t as good as DALL-E 2 when it comes to generating abstract concepts.

Images generated by Nir Barazida

That doesn’t make it any less impressive. Many of the incredible examples you see online are cherry-picked, but the fact that you can fire up your desktop with a low-end RTX 3060 and crank out a new image every 13 seconds is mind-blowing. Step away for a glass of water, and you have ~15 images to sift through when you come back. Many of them are decent and can be iterated on (more on that later).
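As a quick sanity check on those numbers, here is a back-of-the-envelope sketch. The 13 seconds per image comes from the figure above; the function names and the break lengths are made up for illustration, and real generation times vary with resolution, step count, and hardware.

```python
def images_generated(seconds_away: float, seconds_per_image: float = 13.0) -> int:
    """Whole images finished while you are away, at a fixed time per image."""
    return int(seconds_away // seconds_per_image)


def seconds_needed(image_count: int, seconds_per_image: float = 13.0) -> float:
    """Time needed to produce a given number of images."""
    return image_count * seconds_per_image


# Roughly 15 images works out to a bit over three minutes away from the desk.
break_for_15 = seconds_needed(15)          # 195.0 seconds
during_break = images_generated(195)       # 15 images
during_short_break = images_generated(60)  # 4 images in one minute
```

In other words, the "~15 images" figure assumes a break of a little over three minutes.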

If you’re interested in playing around with it, go to Hugging Face, dreamstudio.ai, or Google Colab and use their web-based interfaces (all currently free). Or follow a guide and get it set up on your own machine (any guide we write here will be woefully out of date within a few weeks).

The real magic of SD and other image generation is human and computer interaction. Don’t think of this as a “put in a thing, get a new thing out”; the system can loop back on itself. [Andrew] recently did this, starting with a very simple drawing of Seattle. He fed this image into SD, asking for “Digital fantasy painting of the Seattle city skyline. Vibrant fall trees in the foreground. Space Needle visible. Mount Rainier in background. Highly detailed.”

Hopefully, you can tell which one [Andrew] drew and which one SD generated. He fed this image back in, changing the prompt to give it a post-apocalyptic vibe. He then drew a simple spaceship in the sky and asked SD to turn it into a beautiful spaceship; after a few passes, it fit into the scene beautifully. Adding birds and running a low-strength pass brought it all together into a gorgeous scene.
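The loop-back workflow described above can be sketched as a few lines of Python. This is only an illustration under stated assumptions: `img2img` is a hypothetical stand-in for whatever image-to-image call your SD front end exposes, the strength values are invented, and the manual drawing between passes (such as the spaceship) is not modeled.

```python
from typing import Callable, List, Tuple

# Hypothetical signature: (image, prompt, strength) -> new image.
# Not a real library API; plug in your own image-to-image function.
Img2Img = Callable[[object, str, float], object]


def refine(image: object, passes: List[Tuple[str, float]], img2img: Img2Img) -> List[object]:
    """Run each (prompt, strength) pass on the previous output and keep every stage."""
    history = [image]
    for prompt, strength in passes:
        image = img2img(image, prompt, strength)  # the output becomes the next input
        history.append(image)
    return history


def fake_img2img(image: object, prompt: str, strength: float) -> str:
    """Stub that records what was asked for instead of generating pixels."""
    return f"{image} -> [{prompt} @ {strength}]"


history = refine(
    "sketch of Seattle",
    [
        ("digital fantasy painting of the skyline", 0.75),  # assumed strength
        ("post-apocalyptic vibe", 0.6),                     # assumed strength
        ("add birds", 0.3),                                 # low-strength final pass
    ],
    fake_img2img,
)
```

Keeping every intermediate stage in `history` makes it easy to back up a step when a pass goes somewhere you don't like, which happens often with this kind of iteration.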

SD struggles with consistency between generation passes, as [Karen Cheng] demonstrates in her attempt to change the outfit of someone walking in a video. She combines the output of DALL-E (SD should work just fine here) with EBSynth, an AI that is good at taking one modified image and extrapolating how the change should apply to subsequent frames. The results are incredible.

6/ And it turns out, it DOES work for clothes!

It's not perfect, and if you look closely there are lots of artifacts, but it was good enough for me for this project pic.twitter.com/Scl2as7lhJ

— Karen X. Cheng (@karenxcheng) August 30, 2022

Ultimately, this will be another tool to express ideas faster and in more accessible ways. While what SD generates might not be used as final assets, it could be used to generate textures for a prototype game or a logo for an open-source project.

Why should you care?

Hopefully, you can see how exciting and powerful SD and its accompanying cousin models are. If a movie had contained some of the demos above just a few years ago, we likely would have called out the movie for being Hollywood magic.

Time will tell whether we will continue to iterate on the idea or move on to more powerful techniques. But there are already efforts to train larger models with tweaks to understand the world and the prompts better.

Open source is also a bit of a double-edged sword, as anyone can take the model and do whatever they want with it. The license on the model forbids its use for many nefarious purposes, but at this point, we don’t know what sort of ramifications it will have long term. Looking ten or fifteen years down the road becomes very murky, as it is hard to imagine what could be done with a version that was 10x better and ran in real time.

We’ve written about how DALL-E impacts photography, but this just scratches the surface. So much more is possible, and we’re excited to see what happens. All we can say is that it is satisfying to look at a picture that makes you happy and know it was generated on your own computer.

Stable Diffusion And Why It Matters

Source: Manila Flash Report
