By now, you’ve surely seen the AI tools that can chat with you or draw pictures from prompts. OpenAI now has Point-E, which takes text or an image and produces a 3D model. You can find a few runnable demos online, but good luck finding one that isn’t too busy to respond.

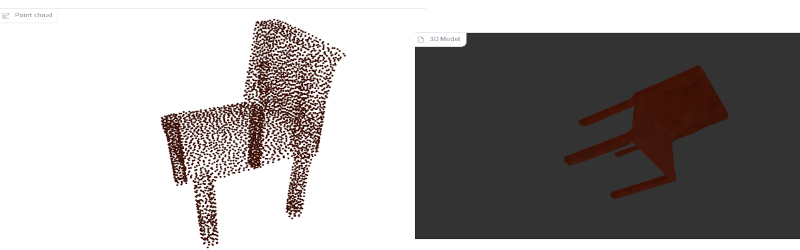

We were not always impressed with the output. Asking for “3d printable starship Enterprise,” for example, produced a point cloud that looked like a pregnant Klingon battle cruiser. As with most of these tools, the trick is finding a good prompt. Simple things like “a chair” seemed to work somewhat better.

Is this going to put 3D designers out of business? We think it isn’t very likely, at least for engineering purposes. Unlike a visual image, most 3D models need to be exact and have interfaces to other things. Maybe one day an AI will work like a Star Trek computer or replicator, making just what you want from a hazy description. For today, though, it might be more useful to train an AI to examine an existing design and help identify problem areas for printing, or to improve support structures and orientation. That seems more realistic.
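To make "identify problem areas for printing" a little more concrete, here is a minimal sketch of the kind of check such a tool might start from. It is not AI and it has nothing to do with Point-E. It is a plain geometric heuristic that flags steeply overhanging triangles in a mesh. The function names, the 45-degree threshold, the `bed_z` parameter, and the assumption that triangles are wound counter-clockwise when seen from outside are all ours, chosen for illustration.

```python
import math

def face_normal(a, b, c):
    """Unit normal of triangle (a, b, c), assuming counter-clockwise winding."""
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    # Cross product of the two edge vectors.
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        return None  # degenerate triangle, no usable normal
    return (nx / length, ny / length, nz / length)

def overhang_faces(triangles, max_angle_deg=45.0, bed_z=0.0):
    """Return indices of downward-facing triangles steeper than the threshold.

    The overhang angle is measured from vertical: a vertical wall is 0 degrees
    and a flat ceiling is 90 degrees. Faces lying on the bed are skipped.
    The threshold is an illustrative assumption, not a universal rule.
    """
    flagged = []
    for index, (a, b, c) in enumerate(triangles):
        if all(vertex[2] <= bed_z for vertex in (a, b, c)):
            continue  # resting on the build plate, needs no support
        normal = face_normal(a, b, c)
        if normal is None or normal[2] >= 0:
            continue  # degenerate, vertical, or upward-facing
        angle = math.degrees(math.asin(min(1.0, -normal[2])))
        if angle > max_angle_deg:
            flagged.append(index)
    return flagged

if __name__ == "__main__":
    ceiling = ((0, 0, 1), (0, 1, 1), (1, 0, 1))  # faces straight down at z = 1
    wall = ((0, 0, 0), (1, 0, 0), (0, 0, 1))     # vertical face
    print(overhang_faces([ceiling, wall]))
```

A real tool would go further, for example by trying several orientations and picking the one with the least flagged area, but even this much shows why the problem is more tractable than generating an exact part from a sentence.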

On the other hand, we understand these are early days for these tools. But in their current state, asking if this will replace humans is like wondering if parrots will replace radio disc jockeys. The writing and 3D modeling are, generally, not precise enough. If you wanted an artistic model or a piece of clip art, you might be able to get away with using a tool like this. Still, we are far from AI replacing a writer, a photographer, a graphic artist, or a 3D designer for most practical purposes.

Our opinion: computers work best when they boost human creativity, not replace it. Texturing is one example. Our own [Jenny List] has taken us through the search engine aspects of AI, too.

3D Modelling in English with AI

Source: Manila Flash Report
