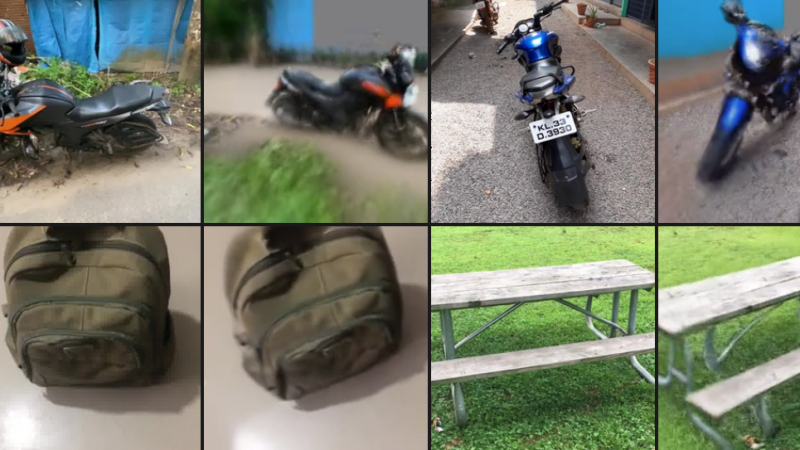

ZeroNVS is one of those research projects that is rather more impressive than it may look at first glance. On one hand, the 3D reconstructions (we urge you to click that first link to see them) look a bit grainy and imperfect. On the other hand, they were reconstructed using a single still image as input.

How is this done? It uses NeRFs (neural radiance fields), which leverage machine learning, but with yet another new twist. Existing methods mainly focus on single objects and masked backgrounds, but the new approach makes the technique applicable to a variety of complex, in-the-wild images without the need to train new models.
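For readers who have not met NeRFs before, here is a minimal sketch of the one step every radiance field shares: blending the density and color samples along a camera ray into a single pixel. This is the generic NeRF compositing rule, not code from the ZeroNVS repository, and the function name `composite_ray` and its arguments are our own illustrative choices.

```python
import math

def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single RGB pixel.

    densities: volume density (sigma) at each sample along the ray
    colors:    (r, g, b) color at each sample
    deltas:    distance between consecutive samples
    All names here are hypothetical, chosen for illustration only.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        # Opacity contributed by this short segment of the ray
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        for channel in range(3):
            pixel[channel] += weight * rgb[channel]
        # Whatever this segment absorbed is no longer visible behind it
        transmittance *= 1.0 - alpha
    return pixel, transmittance
```

In a real NeRF, a neural network predicts the densities and colors for every sample point, and this loop runs once per pixel of the rendered view. Empty space (zero density) contributes nothing, while a dense surface hides whatever lies behind it.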

There are a ton of sample outputs on the project summary page that are worth a browse if you find this sort of thing at all interesting. Some of the 360-degree reconstructions look rough, some are impressive, and some are a bit amusing. For example, indoor shots tend to reconstruct rooms that look good but lack doorways.

There is a research paper for those seeking additional details and a GitHub repository for the code, but the implementation requires some significant hardware.

Synthesizing 360-degree Views From Single Source Images

Source: Manila Flash Report
